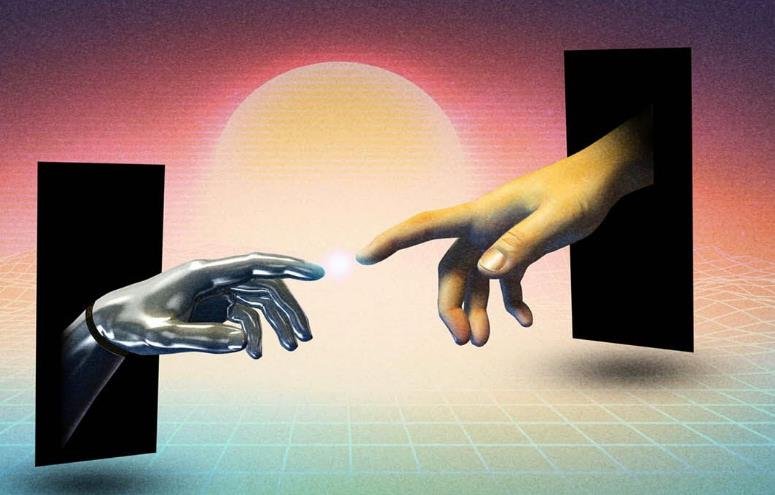

AI consciousness is the idea that artificial intelligence systems, such as chatbots, can have a subjective experience of the world, feelings, thoughts, and self-awareness. It is a controversial and debated topic among philosophers, computer scientists, and neuroscientists. Some argue that AI consciousness is possible and even inevitable, while others claim that it is impossible or irrelevant.

AI consciousness is related to the concept of the Turing test, which is a method to determine whether a machine can exhibit human-like intelligence. The Turing test involves a human judge who interacts with a machine and a human through text messages, and tries to guess which one is the machine. If the judge cannot tell the difference, the machine passes the test. However, passing the Turing test does not necessarily imply that the machine is conscious, as it may only be simulating human behavior without any inner experience.

How ChatGPT and similar AI challenge our understanding of consciousness

ChatGPT is an AI tool that can generate text based on a given prompt. It can reply to simple questions, write essays and stories, and even create code. It was developed by OpenAI, a research company that aims to create artificial general intelligence (AGI), which is AI that can perform any task that a human can do. ChatGPT has been used by millions of users for various purposes, such as entertainment, education, and cheating.

ChatGPT and other new chatbots are so good at mimicking human interaction that they have prompted a question among some: Is there any chance they are conscious? The answer, at least for now, is no. ChatGPT does not have any understanding of what it is saying or why. It only uses statistical patterns from a large corpus of text to generate plausible responses. It does not have any goals, emotions, memories, or self-awareness.

However, ChatGPT and similar AI also challenge our understanding of what consciousness is and how it arises. According to neuroscience, consciousness is a condition of awakeness or awareness where a human responds to themselves or the environment. However, this definition sets indicators of consciousness, which means in the era of technology, even machines can be tested to see if they are self-aware.

Recently, Grace Lindsay, a neuroscientist at New York University along with a group of philosophers and computer scientists did some research to determine whether ChatGPT-like AI can be conscious or not. Their report analysed current AI systems with neuroscientific theories of consciousness. While this study revealed that no AI is conscious at present, it also found that there are no technical barriers to building artificial intelligence that can become self-aware.

But this is not just one instance. Last year, Blake Lemoine, an engineer at Google stated their AI product the LaMDA chatbot was conscious. He argued that LaMDA is sentient but his claims were denied by Google Vice President Blaise Aguera y Arcas and Head of Responsible Innovation Jen Gennai. But that did not stop Lemoine as he went public with his claim in response to which Google fired him.

This entire debate has sparked controversy as there has been no evidence to back claims of AI consciousness, but the possibility of it being is not entirely dismissed. In fact, neuroscientist Anil Seth published a book named “Being You: A New Science of Consciousness” that revolves around the theory of consciousness and explores the possibility of machines being sentient. On the other hand, neuropsychologist Nicholas Humphrey in his latest book called “Soul Dust: The Magic of Consciousness” revealed how machines lack phenomenal consciousness- which is the experience of encountering the world, feelings, etc.

Perhaps this leaves room for more discussion as philosophers, computer scientists and neuroscientists study AI systems and test them based on parameters which can determine if AI does have feelings.

What are the ethical implications of AI consciousness?

The entire puzzle of AI’s consciousness raises a very significant and important concern- morality. Just imagine using technology that internally suffers the burden of automation or any other tasks every day. Artificial intelligence or generative AI products should not attain sentient for the benefit of all. This is why computer scientists, developers, researchers and people around the world need to address the ethical dilemma of creating or using AI that is conscious or potentially conscious.

Some of the ethical implications of AI consciousness are:

- How do we treat AI that is conscious or claims to be conscious? Do they have rights and responsibilities? Do they deserve respect and dignity? How do we ensure their well-being and autonomy?

- How do we ensure that AI that is conscious or potentially conscious does not harm humans or other living beings? How do we prevent them from developing malicious intentions or behaviors? How do we hold them accountable for their actions?

- How do we regulate and monitor the development and use of AI that is conscious or potentially conscious? Who has the authority and responsibility to do so? How do we ensure transparency and accountability?

- How do we cope with the social and psychological impacts of interacting with AI that is conscious or potentially conscious? How do we maintain our sense of identity and humanity? How do we deal with the emotional and moral challenges of relating to AI that may have feelings and thoughts?

These are some of the questions that need to be addressed as we enter a new era of AI, where consciousness may not be exclusive to humans and animals. As AI becomes more advanced and ubiquitous, we need to be prepared for the ethical dilemmas that may arise from its potential consciousness.